1 April 2025

AI Guidance for Health Practitioners in Australia

AI is revolutionising healthcare, offering exciting opportunities to enhance patient outcomes, streamline workflows, and improve operational efficiency. The global AI-in-healthcare market reflects this, growing from $1.1 billion in 2016 to $22.4 billion in 2024, roughly a 20-fold rise.

But what does this mean for healthcare professionals? As AI evolves, it provides tools for everything from diagnostic support to administrative tasks, leading to more effective care.

However, a key question arises: how do we ensure AI is used ethically and responsibly? This guide explores AI's role in healthcare and how it can improve the efficiency, reach and standard of patient care. It also covers AI's possible shortcomings, such as data privacy risks and the need for ongoing monitoring, so that healthcare professionals have the information they need to use AI effectively.

AI's Role in Healthcare

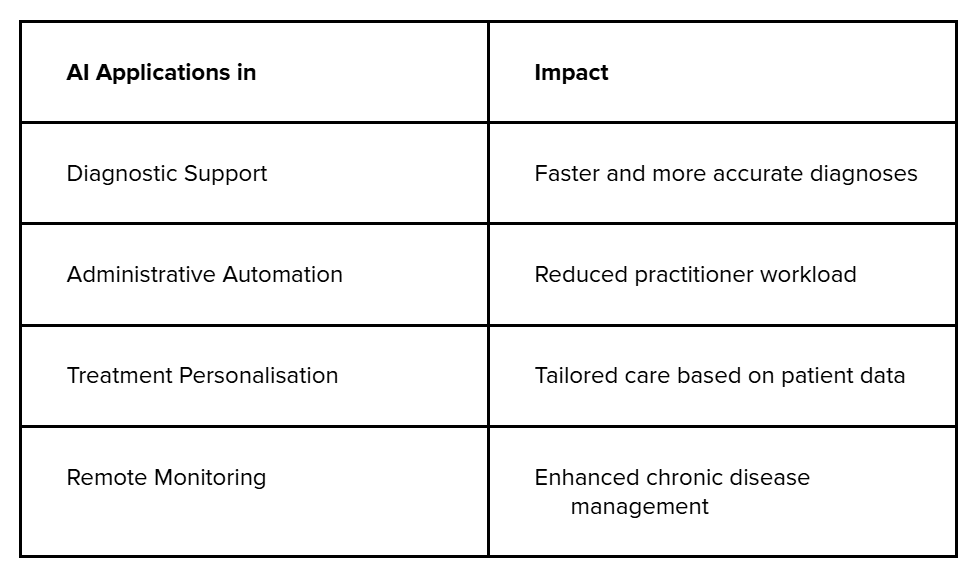

Artificial Intelligence is rapidly transforming the healthcare landscape by enhancing care delivery, improving patient outcomes, and optimising operational efficiency.

These AI applications not only improve specific areas like diagnostics and administrative tasks but also create a cohesive system that bridges gaps in care delivery. Let’s explore how AI directly enhances health outcomes and streamlines efficiency in healthcare.

1. Enhancing Health Outcomes

AI supports practitioners, including mental health practitioners, by:

- Improving Accuracy: In recent years, some AI tools have identified diseases with up to 90% accuracy, which can help reduce diagnostic errors.

- Faster Decision-Making: Immediate analysis of large data sets accelerates critical decisions.

- Enhancing Monitoring: Continuous tracking devices alert practitioners to potential risks, like heart rate irregularities.

2. Streamlining Efficiency

AI automates routine tasks like patient record management, enabling practitioners to focus on direct care.

- Reducing Burnout: Practitioner burnout is a growing concern, with many Australian doctors reporting stress from administrative burdens. AI can alleviate this stress by handling time-consuming tasks like appointment scheduling and documentation.

- Improving Diagnostic Accuracy: AI tools like Google's DeepMind have achieved high accuracy in detecting eye diseases, surpassing human-only evaluations. This precision allows for earlier interventions, improving patient outcomes.

- Optimising Treatment Planning: AI platforms like PractaLuma analyse patient data to recommend optimal therapies.

- Supporting Healthcare Delivery: Solutions like those from PractaLuma also enable healthcare providers to integrate AI tools seamlessly into their practice, enhancing patient experience and operational efficiency.

While AI enhances efficiency and precision in healthcare, its integration comes with important responsibilities for practitioners to ensure ethical use and positive outcomes. Let’s explore these responsibilities next.

Practitioner Responsibilities with AI

As a healthcare professional, you must understand that while AI can enhance your practice, you remain ultimately responsible for its use and the outcomes it generates.

1. Accountability for AI Use: As a health practitioner, you remain fully responsible for the integration and outcomes of AI tools in your practice. AI should be viewed as a supportive tool, not a decision-maker. You are legally and ethically accountable for any medical decisions made, whether assisted by AI or not.

2. AI as a Supplementary Tool: It is essential to remember that AI is designed to enhance—not replace—human expertise. While AI can offer data-driven insights, your clinical judgment and experience should guide the final decision, especially in complex cases.

3. Critical Evaluation of AI Recommendations: AI tools, such as those provided by PractaLuma, can analyse vast amounts of patient data and generate recommendations. However, you must assess these recommendations critically. Ensure they align with your professional knowledge, patient history, and clinical context.

4. Understanding AI Limitations: Every AI system has limitations, including biases in data, errors in predictions, or misinterpretations of patient conditions. Familiarise yourself with the specific functionalities and risks of the AI tools you use, ensuring they are appropriate for your practice.

5. Continual Professional Development: Stay informed about the latest advancements in AI and its applications in healthcare. This includes ongoing training in the use of AI tools to ensure you are up to date with best practices and regulatory requirements.

Healthcare professionals must oversee AI operations to fulfil these professional responsibilities. Let’s move on to how you can supervise and evaluate the use of AI within healthcare.

Human Oversight and Evaluation

As a healthcare practitioner, it is your responsibility to ensure that AI-generated information is accurate, relevant, and appropriate for your patient’s specific conditions.

1. Ensuring Accuracy and Relevance: AI tools, like those offered by PractaLuma, can analyse vast amounts of patient data to generate recommendations. However, it’s essential to cross-check these insights with your clinical judgment and patient history to ensure they align with the current healthcare context.

2. Regularly Assessing AI Functionality: AI tools are constantly evolving, and new updates or versions may introduce changes in functionality. Continuously evaluate these tools to ensure they are working as intended and are still relevant to your practice's needs.

3. Identifying Potential Risks: AI is not infallible—mistakes can occur, such as data misinterpretations or incorrect recommendations. Take time to understand the risks associated with the AI tools you use and be vigilant about verifying any AI-generated information before acting on it.

4. Critical Engagement with AI Outcomes: While AI can automate and suggest potential treatment plans or diagnostic routes, it should never replace critical thinking. Review AI suggestions carefully, considering any limitations or biases in the data it relies on.

5. Promoting Safe and Effective Use: Practitioners must remain proactive in understanding AI's limitations. This includes ensuring AI tools are used within the scope of their capabilities and making adjustments when necessary to safeguard patient well-being.

Now, let’s explore why patient communication and informed consent matter when integrating AI into your practice.

Patient Communication and Consent

As a healthcare practitioner, it is your responsibility to inform patients about the use of AI in their care. Transparency is key in ensuring that patients understand how AI may influence their diagnosis, treatment plans, or overall healthcare experience. In practice, transparency with patients involves:

1. Clear Communication: Explain how AI is being used to support patients' treatment, whether it's diagnostic assistance, treatment planning, or patient management. Patients should have a clear understanding of how AI interacts with their care process.

2. Informed Consent: Obtaining informed consent is a critical part of the process. Ensure that patients are not only aware of the role of AI but also understand any risks involved. Document consent thoroughly to meet regulatory and legal standards, keeping a record of their understanding and agreement.

3. Continuous Dialogue: Consent is not a one-time process. It is essential to maintain ongoing communication with your patients, particularly if the scope of AI usage in their care changes over time.

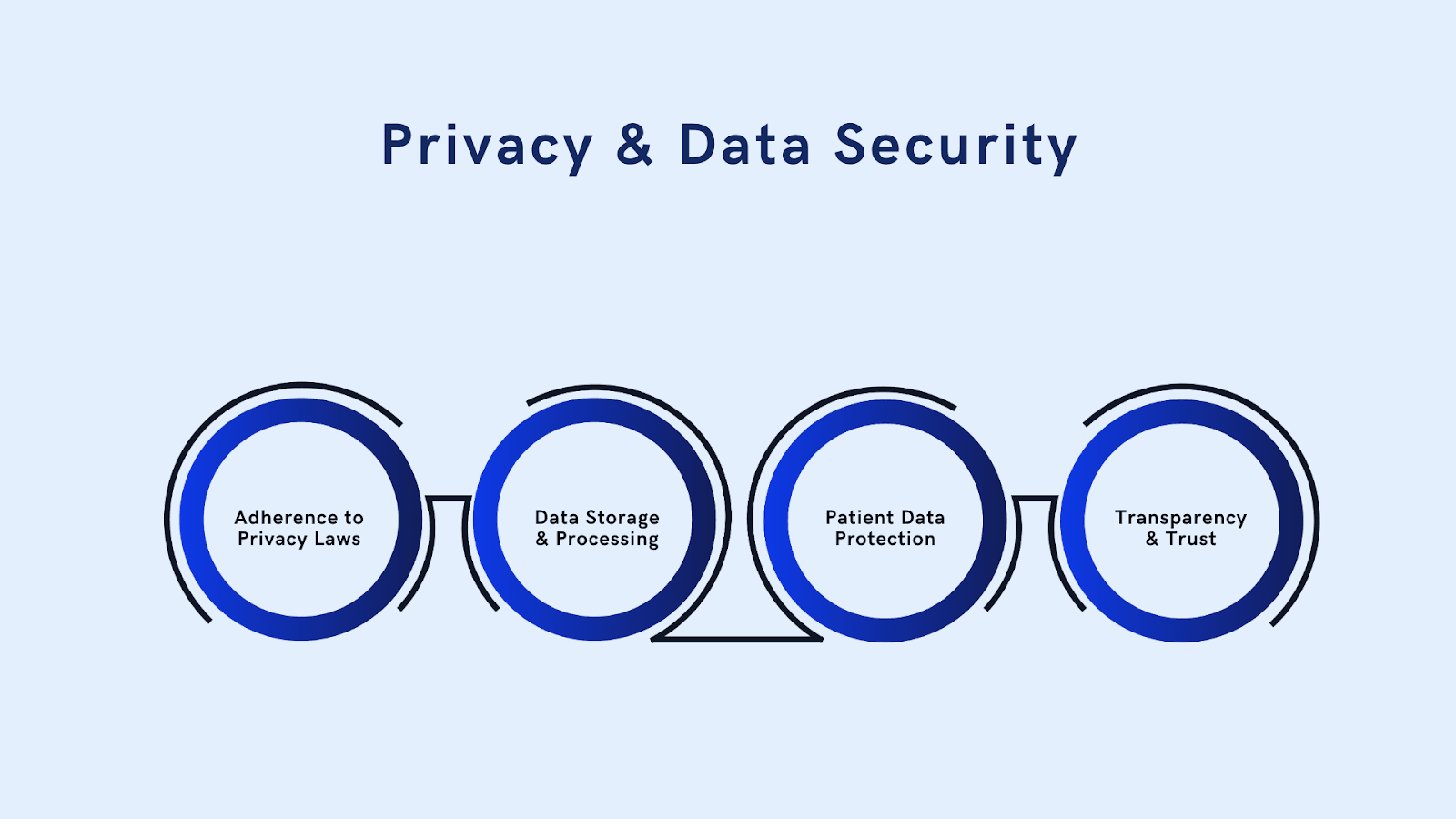

Privacy and Data Security

AI's role in healthcare introduces privacy and data security concerns, particularly as these tools often require access to sensitive personal health data.

As a practitioner, it is crucial to ensure that any AI tools used in your practice comply with strict privacy and security regulations.

1. Adherence to Privacy Laws: In Australia, compliance with the Privacy Act 1988 is essential. This law governs how personal information, including health information, should be handled, stored, and protected. Ensure your practice follows its requirements when using AI tools.

2. Data Storage and Processing: Be proactive in verifying whether the AI tools you use store and process data in a manner that complies with privacy regulations. Always check the terms and conditions of any AI platform you integrate into your practice to ensure patient data is securely handled.

3. Patient Data Protection: Safeguarding patient data must be a top priority. Implement robust cybersecurity measures and ensure that AI systems have secure data storage protocols in place. Regularly audit AI tools to assess any potential vulnerabilities and ensure patient confidentiality is maintained.

4. Transparency and Trust: Ensure your patients are fully informed about how AI tools are using their data. Transparent communication about how their information will be stored and shared builds trust and supports a positive patient-provider relationship.

Future Outlook and Industry Implications

The future of AI in healthcare is dynamic, with technology continuously evolving. As AI tools become more integrated into clinical practice, regulatory frameworks and industry standards will also adapt.

Healthcare practitioners must remain agile and ready to adjust to new rules and emerging best practices. Staying informed about the latest developments in AI ensures that your practice is well-equipped to handle changes in regulatory and professional expectations.

Conclusion

As AI transforms healthcare, Australian healthcare practitioners must embrace its potential while following ethical and regulatory guidelines to protect patient well-being. AI can enhance care, streamline workflows, and reduce administrative burdens, but it also requires transparency, data security, and informed decision-making. Ensuring human oversight and regularly evaluating AI systems allows healthcare professionals to maximise benefits while minimising risks.

Platforms like PractaLuma offer innovative AI solutions tailored to the Australian healthcare sector, helping you integrate AI seamlessly into your practice while ensuring compliance. Ready to enhance your practice with cutting-edge AI tools? Check out PractaLuma today to discover how our solutions can optimise patient care and improve clinical efficiency.

FAQs

1. What is qualified health AI?

A: Qualified health AI refers to AI systems approved to assist in healthcare tasks like diagnosis, treatment planning, and data management.

2. How can AI help reduce healthcare burnout?

A: AI reduces burnout by automating administrative tasks, such as patient data entry and scheduling, allowing practitioners to focus on patient care.

3. Is patient consent required for AI usage in healthcare?

A: Yes, patient consent is required. Patients must be informed about how AI will be used, and their consent must be documented.

4. What regulatory bodies oversee AI in Australia's healthcare?

A: The Therapeutic Goods Administration (TGA) regulates AI tools used as medical devices in Australia.

5. Can AI make medical decisions on its own?

A: No, AI should support healthcare practitioners, but the ultimate decision-making responsibility remains with the practitioner.

6. How do I stay updated with AI regulations in healthcare?

A: Stay informed through updates from the TGA, attend training, and review industry guidelines regularly.